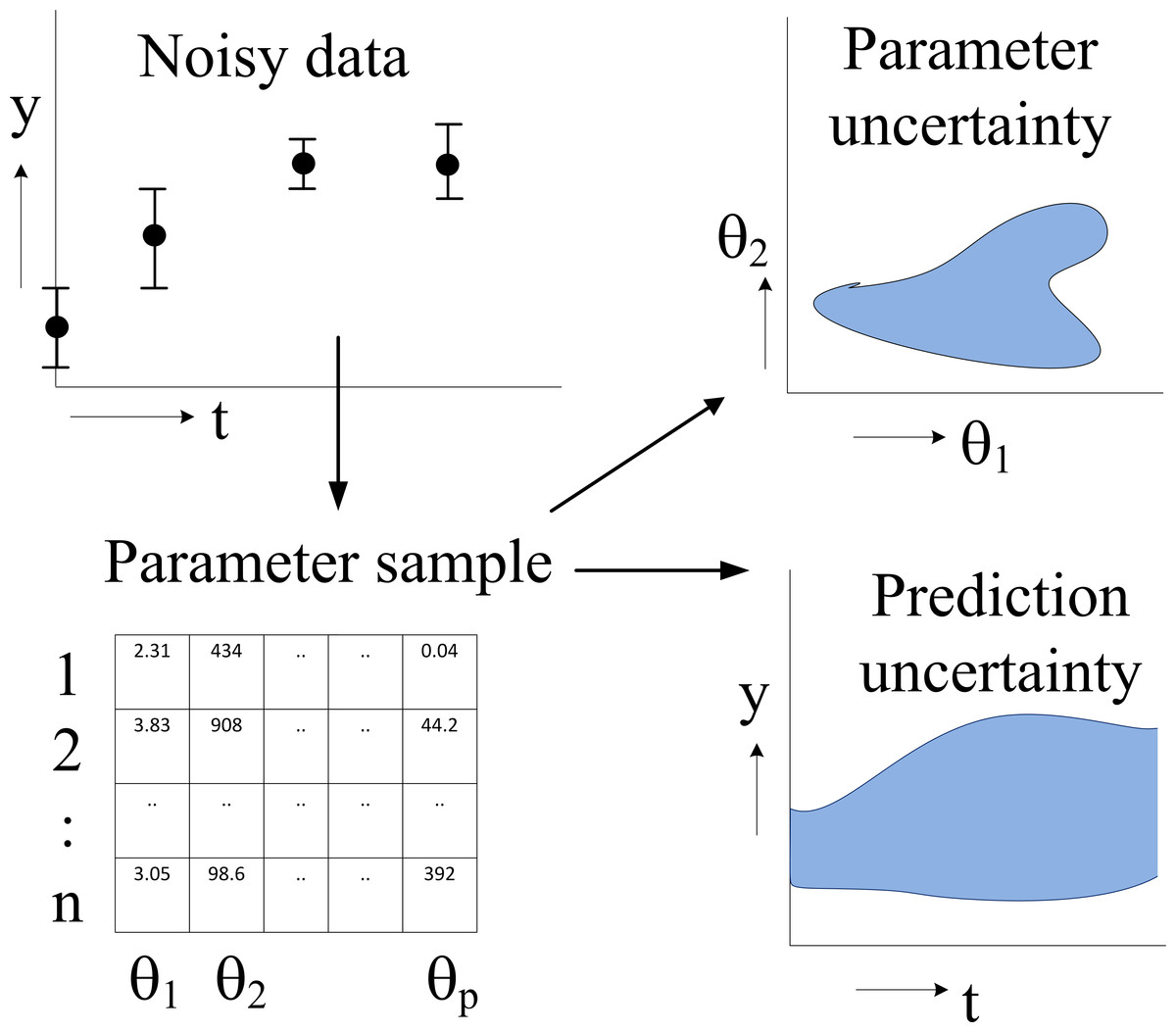

With n unknown, you really are in some trouble, since the problem is non-convex. Here, linear error analysis (as performed by uncertainties) will not work. One solution is to perform Bayesian inference, using a package such as pymc.

Following roughly the case of a linear function, I think it might be done similarly: take the error ellipse and rescale it until the graph of the fitted function becomes tangent to it. I made up a different measure that seems plausible and should give very similar results. Solving for the Lagrangian, though, seems very tedious, although certainly possible. If you are interested in this, I could try to make a write-up, but it would not be as clean as the linear case.
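Both answers turn on the same contrast: for a fixed n the model $y = \frac{a}{x^n} + b$ is linear in a and b and has a closed-form least-squares solution, while n does not. One way to see this is to scan n on a grid, solve the linear fit at each value, and watch how the chi-square changes. This is essentially the question's loop over n_correlation, with chi-square in place of R². The sketch below assumes only the y uncertainties matter (the 50% x uncertainty is ignored), and the data, grid range and function name chi2_for_n are hypothetical. A profile with several dips, or a very flat minimum, is the multiple-solution behaviour the answers warn about. The range of n over which chi-square rises by 1 above its minimum is a common rough estimate of the uncertainty in n, but here it is only a rough guide.

```python
import numpy as np

def chi2_for_n(x, y, sigma_y, n):
    """Best weighted straight-line fit of y against 1/x**n; returns its chi-square.

    Hypothetical helper: only the y uncertainties are used as weights.
    """
    regressor = x ** (-n)
    # np.polyfit expects weights of 1/sigma (not 1/sigma**2) for Gaussian uncertainties.
    coeffs = np.polyfit(regressor, y, deg=1, w=1.0 / sigma_y)
    residuals = (y - np.polyval(coeffs, regressor)) / sigma_y
    return np.sum(residuals ** 2)

# Hypothetical noise-free data generated from a = 3, b = 0.5, n = 1.5.
x = np.linspace(1.0, 10.0, 20)
y = 3.0 / x ** 1.5 + 0.5
sigma_y = np.full_like(x, 0.01)

# Scan the exponent: every point of this profile is a closed-form linear fit.
n_grid = np.linspace(0.5, 2.5, 41)
chi2 = np.array([chi2_for_n(x, y, sigma_y, n) for n in n_grid])
best_n = n_grid[np.argmin(chi2)]
print(f"best n on the grid: {best_n:.2f}")
```

With real, noisy data it is worth plotting chi2 against n_grid rather than trusting the grid minimum alone.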

The value of the slope is 0.56 kPa/s, but since the error is 0.9 kPa/s, we should report just a single significant digit, which makes it 0.6 ± 0.9 kPa/s. Not rounding until the end is not wrong; in fact, it's good practice. As long as you handle your uncertainties correctly, too many significant digits (due to intermediate calculation steps or whatnot) become practically irrelevant, since your value cannot be more precise than the size of your uncertainty anyway.

I have data for x and y that I am trying to fit in the form $y = \frac{a}{x^n} + b$. There are uncertainties associated with both: x has an uncertainty of 50% of x, and y has a fixed uncertainty. I am trying to determine the uncertainty in the fit parameters with the uncertainties package, but I am having issues with curve fitting using scipy.optimize's curve_fit function:

```
minpack.error: Result from function call is not a proper array of floats.
```

How do I fix this error and determine the uncertainty of the fit parameters (a, b and n)?

```python
import numpy as np
from scipy import optimize, interpolate, spatial
from scipy.interpolate import UnivariateSpline

x = np.array([...])  # x data
y = np.array([...])  # y data

coefficient_determination_on = np.empty(shape=(len(n),))

# Inside the loop over trial exponents n_correlation (loop, x_fit, y_fit and linear_fit not shown):
# curve_fit returns (popt, pcov), so the fitted a and b are the entries of popt
(fit_a_raw, fit_b_raw), _ = optimize.curve_fit(linear_fit, x_fit, y_fit)
x_prediction = (fit_a_raw / ((x) ** n_correlation)) + fit_b_raw
y_residual_squares = np.sum((x_prediction - y) ** 2)
y_total_squares = np.sum((y - np.mean(y)) ** 2)
coefficient_determination_on = 1 - (y_residual_squares / y_total_squares)
```

Let me first preface this by saying that the problem is impossible to solve "nicely" given that you want to solve for a, b and n. This is because for a fixed n your problem admits a closed-form solution, while if you let n be free it does not, and in fact the problem may have multiple solutions. Hence classical error analysis (such as that used by uncertainties) breaks down, and you have to resort to other methods.

If n is fixed, your problem is that the libraries you call do not support uarray, so you have to make a workaround. Thankfully, linear fitting (under the l2-distance) is simply linear least squares, which admits a closed-form solution: pad the values with a column of ones and then solve the normal equations. You could do it like this:

```python
import numpy as np

X = np.array([...])
Y = np.array([...])
# Use normal equations to find coefficients
```
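The answer's snippet stops at the normal-equations step. What follows is a minimal completion under stated assumptions. The uncertainties arrays are first split into plain floats (for example with unumpy.nominal_values and unumpy.std_devs from the uncertainties package), so that, as the answer suggests, no array of ufloat objects is passed to NumPy or SciPy routines. Only the fixed y uncertainty is used as a weight; the 50% x uncertainty is not propagated here. The function name fit_fixed_n and the example data are hypothetical.

```python
import numpy as np

def fit_fixed_n(x, y, sigma_y, n):
    """Weighted least squares for y = a / x**n + b with the exponent n held fixed.

    x, y and sigma_y are plain float arrays (not uncertainties uarrays).
    Returns the best-fit (a, b) and their standard uncertainties.
    """
    # Design matrix: the regressor 1/x**n padded with a column of ones for the intercept b.
    A = np.column_stack([x ** (-n), np.ones_like(x)])
    w = 1.0 / sigma_y ** 2                  # weight each point by 1/sigma_y^2
    # Normal equations: (A^T W A) p = A^T W y
    normal_matrix = A.T @ (w[:, None] * A)
    rhs = A.T @ (w * y)
    params = np.linalg.solve(normal_matrix, rhs)
    # With absolute y uncertainties, the parameter covariance is (A^T W A)^-1.
    cov = np.linalg.inv(normal_matrix)
    return params, np.sqrt(np.diag(cov))

# Hypothetical noise-free example: a = 3, b = 0.5, with n fixed at 1.5.
x = np.linspace(1.0, 10.0, 20)
y = 3.0 / x ** 1.5 + 0.5
sigma_y = np.full_like(x, 0.01)
(a, b), (a_err, b_err) = fit_fixed_n(x, y, sigma_y, n=1.5)
print(f"a = {a:.3f} ± {a_err:.3f}, b = {b:.3f} ± {b_err:.3f}")
```

The returned uncertainties are the usual $\sqrt{\operatorname{diag}\big((A^\top W A)^{-1}\big)}$ for independent, absolute y errors. They describe a and b at that one value of n only, which is exactly why the answers say n needs different treatment.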

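In R, the errors package can be set to print values in plus-minus notation with one significant digit of uncertainty:

```r
options(errors.notation = "plus-minus", errors.digits = 1)
```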
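The rounding rule from the slope example (0.56 kPa/s with an uncertainty of 0.9 kPa/s, reported as 0.6 ± 0.9 kPa/s) can also be sketched in Python. This is a hypothetical helper, round_to_uncertainty, not part of the R errors package. It rounds the uncertainty to one significant digit by default and the value to the same decimal place.

```python
import math

def round_to_uncertainty(value, uncertainty, sig_digits=1):
    """Round `uncertainty` to `sig_digits` significant digits and `value` to the same decimal place.

    Hypothetical helper for reporting results such as 0.6 ± 0.9.
    """
    if uncertainty <= 0:
        raise ValueError("uncertainty must be positive")
    # Power of ten of the uncertainty's leading digit, e.g. 0.9 -> -1, 12 -> 1.
    exponent = math.floor(math.log10(uncertainty))
    decimals = sig_digits - 1 - exponent
    rounded_unc = round(uncertainty, decimals)
    # Rounding can carry into a new leading digit (0.96 -> 1.0); shift one place if it does.
    if math.floor(math.log10(rounded_unc)) > exponent:
        decimals -= 1
        rounded_unc = round(uncertainty, decimals)
    return round(value, decimals), rounded_unc


slope, slope_err = round_to_uncertainty(0.56, 0.9)
print(f"{slope} ± {slope_err} kPa/s")  # prints 0.6 ± 0.9 kPa/s
```

As the text says, this rounding belongs at the very end: intermediate results should keep their full precision.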

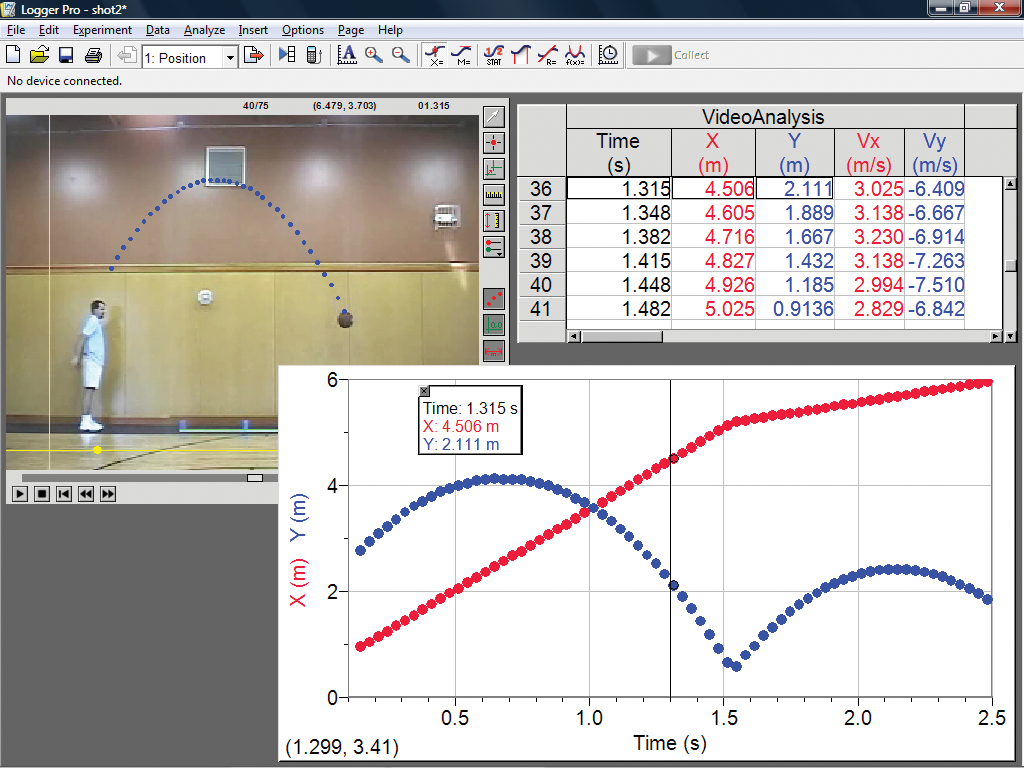

# Logger Pro: how to get the uncertainty of fitted parameters

I have done an experiment that found the initial reaction rate for a reaction that created gas, with the rate in $\pu{kPa s-1}$. My teacher said that the error in the time was so small that it is negligible (though not exact). I got Vernier Logger Pro to do the tangent and slope calculations for me, which is why there are more decimal places than the uncertainty justifies. I do not think using percentage uncertainty would make sense here, because you could measure the slope with however big or small numbers you wanted, depending on which two points you chose to use.

For functions such as addition and subtraction, absolute uncertainties can be added; for multiplication, division and powers, percentage uncertainties can be added. If one uncertainty is much larger than the others, the approximate uncertainty in the calculated result can be taken as due to that quantity alone.

You have not propagated your errors properly. Note that the sum in your numerator evaluates to a small number with an error of roughly the same size ($99.73 \pm 0.01 - 99.72 \pm 0.01 = 0.01 \pm 0.01$). Also, error propagation cannot be done by simply adding up the errors. Instead of calculating the error propagation manually (cumbersome, and prone to errors, hehe ;-)), I think it's better to use suitable software. Both R and Python (freely available, commonly used tools) can handle error propagation. Here's how to do it with R (the code should be reproducible, assuming you have installed R).
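The answer recommends software over hand calculation. As a minimal pure-Python sketch of what such software does to first order, assuming the errors are independent so they combine in quadrature rather than by simple addition, the numerator from the answer works out as follows (the uncertainties package automates the same rules). The function names are hypothetical, and the 20 s time with zero uncertainty is a made-up stand-in for the teacher's "negligible" time error.

```python
import math

def abs_uncertainty_sum(*abs_errors):
    """Absolute uncertainty of a sum or difference of independent quantities.

    Independent errors combine in quadrature; simply adding them gives a
    worst-case upper bound instead.
    """
    return math.sqrt(sum(e ** 2 for e in abs_errors))

def rel_uncertainty_product(*rel_errors):
    """Relative uncertainty of a product or quotient of independent quantities (quadrature)."""
    return math.sqrt(sum(r ** 2 for r in rel_errors))

# Numerator from the answer: (99.73 ± 0.01) - (99.72 ± 0.01)
diff = 99.73 - 99.72
diff_err = abs_uncertainty_sum(0.01, 0.01)  # sqrt(2) * 0.01, about 0.014 (simple addition would give 0.02)
print(f"difference = {diff:.2f} ± {diff_err:.2f}")

# Hypothetical denominator: a 20 s interval whose uncertainty is treated as negligible.
t, t_err = 20.0, 0.0
rate = diff / t
rate_rel_err = rel_uncertainty_product(diff_err / diff, t_err / t)
print(f"rate = {rate:.4f} ± {rate * rate_rel_err:.4f} (relative uncertainty {rate_rel_err:.0%})")
```

With the time error set to zero, the quotient's relative uncertainty is just that of the difference. This is the "one dominant uncertainty" rule from the question, and the large relative error shows why the rate ends up reported with a single significant digit.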
